NVIDIA’s next-generation Rubin AI architecture has emerged as the highly anticipated successor to the dominant Blackwell platform, promising to redefine the landscape of AI computing. Yet, even as the industry buzzes with projections of Rubin’s groundbreaking capabilities, a palpable tension exists: NVIDIA’s unwavering official statements of being ‘on track’ clash sharply with persistent industry rumors of significant delays. This dynamic sets the stage for a high-stakes competitive narrative, particularly as AMD’s aggressive advancements in the AI chip space continue to apply pressure, making the battle for AI supremacy more intense than ever before.

The AI Chip War: NVIDIA’s Rubin vs. AMD’s Instinct

Official Stance: NVIDIA’s Roadmap

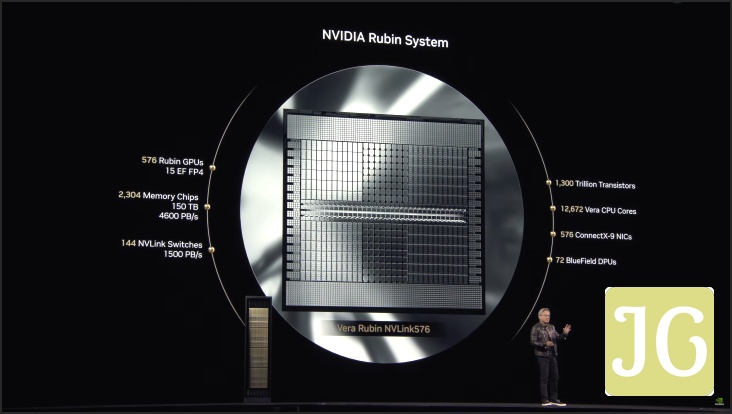

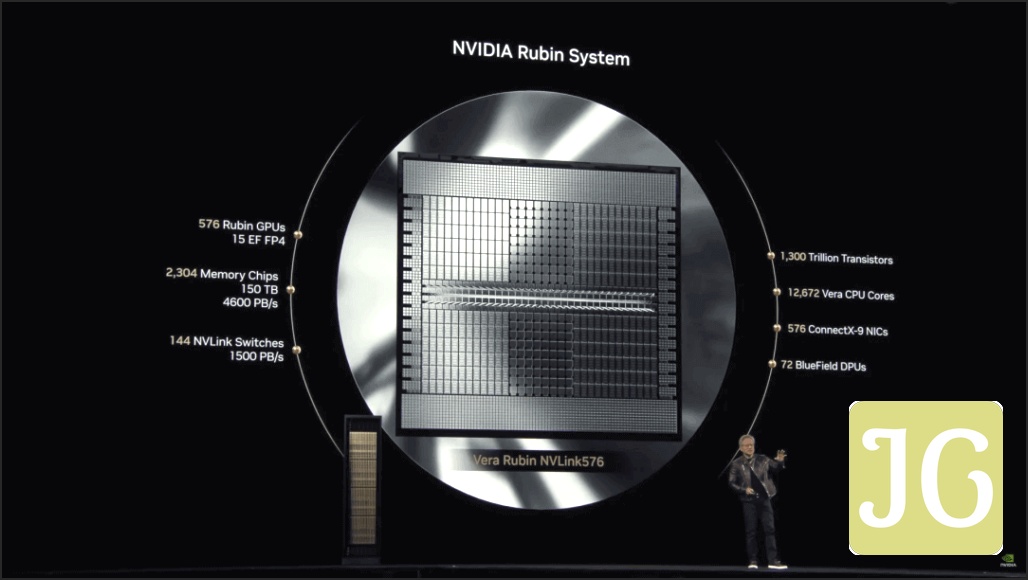

NVIDIA has consistently reaffirmed its commitment to an aggressive annual rhythm for AI infrastructure buildout, a strategy Jensen Huang himself has championed. Following the Blackwell architecture, which is now in full production, and the upcoming Blackwell Ultra (GB300) slated for systems in the second half of this year, the spotlight firmly shifts to Rubin. Officially named in honor of astrophysicist Vera Rubin, this next-generation platform, including the Rubin GPU and Vera CPU, is projected for a market launch in the second half of 2026. The more powerful Rubin Ultra, featuring the Rubin Ultra GPU, is then set to arrive in systems by the second half of 2027. This ambitious roadmap includes key specifications such as TSMC’s advanced 3nm process node, NVIDIA’s first-ever chiplet design, and an upgrade to HBM4 memory. While the memory capacity is expected to remain at 288GB per GPU, consistent with the B300, Rubin is projected to boast a significant bandwidth increase to 13 TB/s and double the NVLink speed compared to Blackwell, promising substantial performance gains and efficiency improvements.

The Rumor Mill: Delays & Redesigns

Despite NVIDIA’s repeated denials to financial outlets like Barron’s and Seeking Alpha, the rumor mill continues to churn with reports suggesting a potential four to six-month delay for the Rubin chip. Sources like Fubon Research and wccftech have fueled these speculations, indicating that the delay could be a direct result of a redesign effort. This rumored redesign is allegedly aimed at countering the competitive threat posed by AMD’s forthcoming MI450, a key component of its MI400 series. While NVIDIA has already completed the first tape-out for Rubin, a second is reportedly planned for late September or October, further hinting at potential adjustments to the timeline. The market remains skeptical, viewing NVIDIA’s denials as predictable pre-earnings PR, especially given the timing of these rumors.

AMD’s Counter-Offensive: The MI400 Threat

AMD’s Instinct MI400 series represents a formidable competitive threat, with rumored specifications that could significantly challenge NVIDIA’s dominance. The MI400 chips are reportedly designed with a highly modular architecture, leveraging AMD’s Infinity Fabric over Ethernet for simpler and more efficient rack deployment. This approach streamlines the integration of multiple accelerators, making large-scale AI infrastructure easier to manage. Crucially, the MI400 is projected to feature an impressive twelve HBM4 stacks per GPU, translating to a massive 432 GB of on-package memory and an astounding 19.6 TB/s of bandwidth. This substantial memory capacity and bandwidth, coupled with AMD’s first rumored rack-scale AI server supporting 72 processors, offers a compelling alternative for data centers and AI developers. It’s these aggressive performance targets and design philosophies that are widely believed to be putting direct pressure on NVIDIA, potentially influencing their Rubin roadmap and forcing strategic adjustments.

Rubin (Projected) vs. MI400 (Rumored) Key Specs

NVIDIA Rubin (Projected)

TSMC 3nm

Chiplet

HBM4

288GB

13 TB/s

Doubled vs. Blackwell

50 PFLOPS

AMD Instinct MI400 (Rumored)

Unspecified (likely advanced)

Modular

HBM4

432 GB (12 HBM4 stacks)

19.6 TB/s

Infinity Fabric over Ethernet

72 processors

The Fandom Reacts: Skepticism, Confidence, and Customer Shifts

The PC gaming community, particularly among AMD enthusiasts, views NVIDIA’s denials of Rubin AI delays with deep skepticism. There’s a prevailing belief that AMD’s competitive advancements are directly forcing NVIDIA into a challenging position, potentially leading to significant customer shifts towards AMD’s MI400 series. This narrative paints a picture of a market in flux, where traditional loyalties might give way to practical considerations of availability and competitive performance.

Emotion: Skepticism & Cynicism

The community largely distrusts NVIDIA’s official statements regarding Rubin’s ‘on track’ status, viewing them as predictable PR maneuvers to control the narrative rather than transparent updates, especially given the timing before earnings.

“I mean if I was Nvidia I would deny the delayed rumors too, especially having to delay to match AMD, and that story hitting right before Nvidia earnings.”

Emotion: Confidence & Anticipation (AMD supporters)

Among AMD supporters, there’s a strong belief that AMD’s competitive pressure is directly impacting NVIDIA, leading to perceived delays for Rubin and a sense of impending market shift.

“I love it. It was sent as a direct shot to NVDA. AMD has far more inside knowledge than MSNBC. This is complete proof to me that Rubin has problems.”

Emotion: Conviction & Excitement (Customer Shifts)

A prevalent community theory posits that Rubin’s rumored delays have already prompted customers to switch orders to AMD’s MI400s, suggesting a tangible competitive gain for AMD.

“**THE CUSTOMERS OF RUBIN WERE NOTIFIED ABOUT THE DELAY AND CALLED AMD TO ORDER MI400s INSTEAD!** That’s why AMD’s tweet and knowledge! Makes sense?”

The Supply Chain’s Silent Battle: TSMC’s Pivotal Role

Beneath the high-profile announcements and competitive posturing lies the silent, yet critical, battle within the supply chain. TSMC stands as the undisputed linchpin, serving as the sole fabricator for NVIDIA’s cutting-edge B200 and upcoming Rubin chips, as well as Tesla’s ambitious Dojo series. The sheer production volume required for these high-performance AI chips hinges entirely on TSMC’s aggressive capacity expansion, particularly for advanced nodes like 3nm and 4nm. While TSMC is investing billions to boost wafer output, the primary bottleneck for both NVIDIA and Tesla’s chip volumes isn’t the wafers themselves, but rather the crucial packaging solutions like CoWoS-L (Chip on Wafer on Substrate-Local). This advanced packaging is essential for the high-density integration needed in these next-generation chips. Ultimately, the ability to secure sufficient and timely CoWoS-L capacity from TSMC is as critical as the architectural innovation itself, determining who can actually deliver on their promises at scale.

Beyond the Benchmarks: Why This Matters for the Future of AI (and Gaming)

This high-stakes competition in AI chip development extends far beyond raw benchmarks and corporate rivalries; it fundamentally underpins the future of artificial intelligence and, by extension, profoundly impacts the gaming world. Advancements in chips like NVIDIA’s Rubin and AMD’s MI400 will serve as the raw computational power fueling the next generation of AI. This includes the rise of sophisticated agentic AI, capable of complex reasoning and multi-step problem-solving, and the burgeoning field of physical AI, which Jensen Huang estimates to be a $50 trillion opportunity poised to revolutionize everything from industrial robotics to autonomous vehicles. For gamers, these developments translate into more sophisticated in-game AI, leading to more dynamic and intelligent NPCs, enemies, and environments. Furthermore, advanced upscaling techniques will continue to evolve, pushing visual fidelity further while maintaining high frame rates, and cloud-based gaming infrastructure will become even more robust and responsive, blurring the lines between local and streamed experiences. NVIDIA, in particular, has strategically shifted its focus from purely training workloads to emphasizing inference, recognizing it will dominate AI workloads in the coming decade, a move that directly impacts the real-time responsiveness and efficiency of AI applications across all sectors, including gaming.

Frequently Asked Questions About the AI Chip Landscape

What is NVIDIA’s ‘annual rhythm’ for chip releases?

NVIDIA is committed to an aggressive annual rhythm for AI infrastructure buildout. This strategy involves introducing new GPUs, CPUs, and accelerated computing advancements each year, ensuring a continuous pipeline of innovation. This includes platforms like Blackwell Ultra, Rubin, and Vera, all designed to drive significant performance gains and efficiency improvements in AI.

How does HBM4 memory impact AI chip performance?

HBM4 (High Bandwidth Memory 4), and its enhanced variant HBM4e for future chips like Rubin Ultra, is crucial for AI chip performance due to its significantly increased bandwidth and capacity. AI models, especially large language models, require immense amounts of data to be processed quickly. HBM4 allows for rapid data transfer between the memory and the GPU, which is vital for efficiently handling the massive datasets and complex computations involved in advanced AI training and inference, preventing bottlenecks that would otherwise cripple performance.

What is ‘Sovereign AI’ and why is it important?

Sovereign AI refers to a nation’s capacity to develop and deploy artificial intelligence using its own domestic infrastructure, datasets, and workforce. This concept, highlighted by NVIDIA at GTC, is pivotal for national innovation, economic competitiveness, and security. It ensures that countries can control their AI capabilities, protect sensitive data, and tailor AI solutions to their unique needs and values without relying solely on foreign technologies or infrastructures.